At present, there are many posts related to esProc SPL on the Internet, such as solution introduction, test report, case sharing, but most of them only involves a certain aspect, and hence it is still difficult for readers and users to understand esProc SPL in an all-round way. This article provides a comprehensive introduction of esProc SPL, allowing you to recognize and understand it on the whole.

esProc SPL is a data analysis engine with four main characteristics: low code, high performance, lightweight and versatility. To be specific, SPL allows you to write simply, and makes the running speed faster; SPL can be used either independently or embedded in applications; SPL is suitable for a variety of application scenarios. Analyzing the data through esProc SPL can reduce the overall application cost by several times compared with SQL-represented traditional technologies. Details will be given below.

What is esProc SPL?

First let’s explain what esProc SPL is.

As a computing and processing engine for structured and semi-structured data, esProc SPL can be used as an analysis database or a data computing middleware, and is mainly applied to two data analysis scenarios:offline batch job and online query. It is worth mentioning that, unlike common analysis database on the market, esProc SPL is neither SQL system nor NoSQL technology (such as MongoDB, HBase), and instead, it adopts self-created SPL (Structured Process Language) syntax, which is simpler in coding and higher in running efficiency compared with existing data processing technologies.

What pain points does esProc SPL solve?

SPL mainly solves data problems, including hard to write, slow to run and difficult to operate and maintain. Here below are some examples.

Currently, many industries need to do batch job, and this job is generally done at night when normal business ends. Therefore, there will be a fixed time window, and this job must be completed in the window, otherwise it will affect the normal business. However, the pressure of batch job will increase as the business accumulates. Since the time window is fixed, sometimes the batch job cannot be completed within the time window with the increase of business and data volume, especially on critical dates such as at the end of a month/year.

When querying a report, there will always be several important reports that are slow to query, taking three or two minutes or even longer, even after several rounds of optimization, the query effect is not improved significantly, causing users to get angry. Sometimes when the number of users querying the report increases, and the time span they select is longer, it is even more difficult to find out the result.

In practice, we often see very long, extremely complex SQL code. Not only is such code nested with N layers, but it needs hundreds of lines to write a statement. For such code, it is hard to write, and impossible to modify, and even the programmer himself is confused after a few days. As for the stored procedure, the situation is worse, sometimes a stored procedure reaches tens or hundreds of KBs in size, making it very hard to write and maintain.

Although some complex calculations can be written with stored procedure, and it is simpler than JAVA, the stored procedure cannot migrate the application, and over-reliance on it will cause problems to application framework such as failure to extend the application and high coupling.

Many kinds of data sources are present nowadays, for the databases alone, there are many kinds. Besides, there are many other data sources like NoSQL, text, Excel, JSON. If you want to use them in a mixed way, it will be more difficult. Specifically, it is not worth importing them all into the database because this method not only causes poor data real-time, but occupies the space of database, putting more pressure on the database; on the contrary, if you don’t load them into database, you have to do hard coding, which is very difficult. As a result, you will be in a dilemma.

Generally, esProc SPL has the ability to solve the problems including slow batch job, slow query, high database pressure, difficult to code and maintain in SQL, mixed calculation of multiple data sources, unreasonable application framework.

We list more problems here below:

- Slow batch jobs can not fit in certain time window, being strained especially on critical summary dates

- Being forced to wait for minutes for a query/report, the business personnel become angry

- More concurrencies, longer the query time span, the database crashes

- N-layer nested SQL or stored procedures of dozens of KBs, programmer himself is confused after a few days

- Dozens of data sources like RDB/NoSQL/File/json/Web/…, cross-source mixed computation is highly needed

- Separate the hot data and cold data into different databases, it is hard to perform real-time queries on the whole data

- Too much relied on the stored procedures, the application cannot migrate, framework is hard to adjust

- Too many intermediate tables in the database, exhausting the storage and resources, but dare not to delete them

- Endless report demands in an enterprise, and how can the cost of personnel be relieved?

Of course, for esProc SPL-targeted scenarios, corresponding technologies are also available. So, what are the counterpart technologies of esProc SPL?

The most important one is the database and data warehouse that use SQL syntax and are applied to OLAP scenarios. For example, the common relational databases including MySQL, PostgreSQL, Oracle, DB2, etc.; the data warehouses on Hadoop like Hive, Spark SQL; new MPP and cloud data warehouse such as Snowflake; some commercial all-in-one database machine like Oracle’s ExaData.

From a development language perspective, SPL can replace some data analysis and statistical technologies such as Python, Scala, Java and Kotlin.

Compared with the above-mentioned technologies, esProc SPL has the advantages of low code, high performance, lightweight and versatility. Specifically, SPL is more concise and simpler than Python and SQL in implementing calculation, especially complex calculation, in other words, SPL code is shorter; esProc SPL provides a large number of high-performance algorithms and high-performance storage, making it possible to run faster; esProc SPL can be used either independently or integrated into applications, and has the ability to compute directly on multiple data sources, and hence it is more lightweight and open; In addition to conventional computing ability, SPL also provides many functions including matrix, fitting and even AI modeling, and most data tasks can be easily implemented in SPL system, and thus SPL is more versatile.

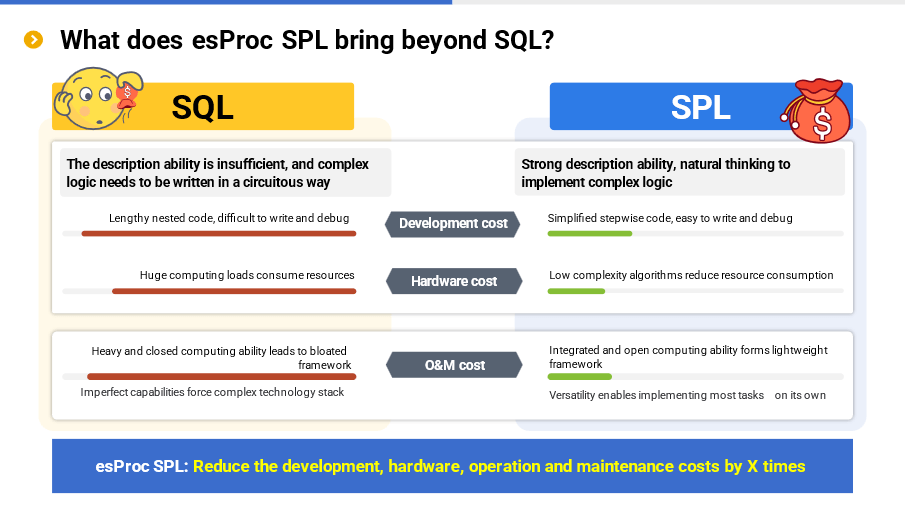

The main counterpart technology of SPL is still SQL, after all, SQL is a most widely used technology in the field of data analysis. So, what does esProc SPL bring beyond SQL?

Looking at SQL from the perspective of modern complex business and big data, you will find that the computing and description abilities SQL are insufficient. Due to the lack of necessary data types and calculation features (such as ordered calculation), SQL often has to implement complex calculation in in a multi-layer nesting and circuitous way, this way causes two problems.

The first is the development cost. We often see a SQL code with over a thousand lines in practice. Once the calculation logic becomes slightly complex, it has to write a long and multiply-layer nested code, which is not only difficult to write and debug, but the programmer himself cannot understand what the code he wrote means after a period of time, this will inevitably lead to an increase in development cost. In contrast, SPL provides rich data types and calculation features, greatly enhancing its computing and description abilities. In addition to a more agile syntax system, SPL also advocates step-wise coding, allowing you to implement complex calculation logic according to natural thinking of “multi-step”, and hence it is easy to code and debug and significantly reduces development cost.

The second is the performance caused by complex SQL coding. Although more efficient way is available, it cannot be implemented in SQL, and instead it has to use slow algorithms. If you want to achieve a desired performance index, you need to add more hardware, resulting in an increase in hardware cost. SPL encapsulates a lot of high-performance algorithms (and storage) and needs less hardware to achieve the same performance, so the hardware cost is effectively reduced. Below you will find many cases where SPL reaches or surpasses SQL performance with less hardware.

Since the computing system of SQL (database) is closed, and data can only be calculated after being loaded into database, and usually, database can only be deployed independently, it leads to a bloated and heavy framework; In addition, the computing ability of SQL is actually imperfect, and SQL is not suitable for handling some complex scenarios independently, and it has to adopt other technologies like Python, Java to make up for its shortcomings. However, these completely different technologies will increase the complexity of technology stack. Heavy and bloated framework and complex technology stack dramatically increases the O&M cost. By contrast, SPL is more open in computing ability, and able to calculate directly on various data sources, and supports independent or integrated use, and its framework is lighter. Moreover, SPL offers comprehensive functions, making it easy to implement complex computing, and making it possible to accomplish most tasks without other technologies, and its technology stack is simpler. Lightweight framework and simply technology stack make O&M cost lower.

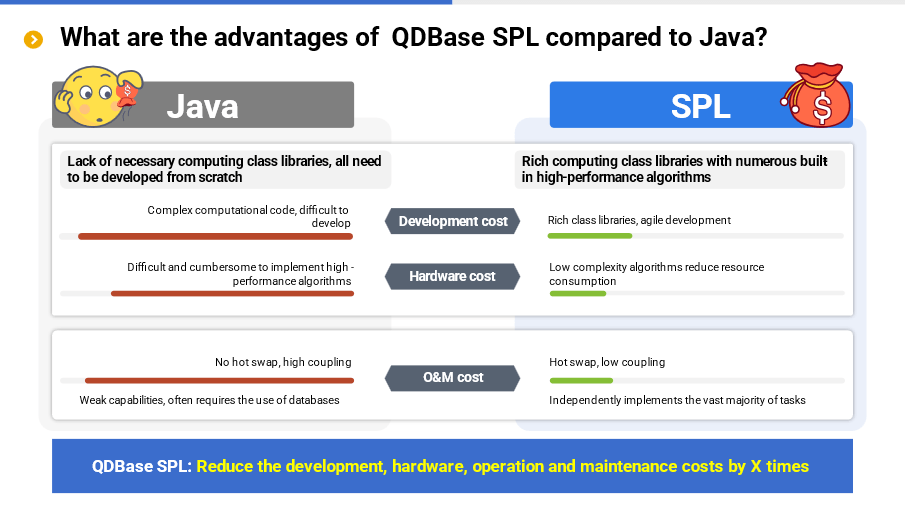

SPL also has significant advantages over Java. Java is a full-featured programming language, and can handle any data computing task in theory. However, due to its too native nature and lack of necessary computation libraries, all computing tasks need to be developed from scratch, resulting in a difficulty to implement.

Especially for high-performance algorithms, it is more difficult to implement in Java. Even if programmers take a great effort to work it out, the performance is pretty poor. Therefore, not only is the development cost high, but the performance is too low. As a result, the hardware cost is increased.

In addition, Java has some shortcomings in practice. For example, it is difficult to achieve hot swap as a compiled language; tight coupling occurs since different applications/modules need be deployed together with main application. These shortcomings have a significant adverse impact on data analysis scenarios that frequently undergo changes. To avoid these shortcomings, programmers often use SQL together with Java in practice, for the reason that SQL is simpler and more convenient for many calculations. However, in doing so, not only does the SQL problem still exist, but it also brings new Java problem, resulting in complex technology stack, a difficulty to use, and high O&M costs.

In contrast, SPL does not have these problems, because it provides rich computing libraries, making it easy to implement calculation tasks, and offers many low-complexity algorithms to guarantee performance. In addition, as an interpreted language, SPL naturally supports hot swap, and coupling problem will never occur. Compared with SQL, SPL has a greater advantage over Java.

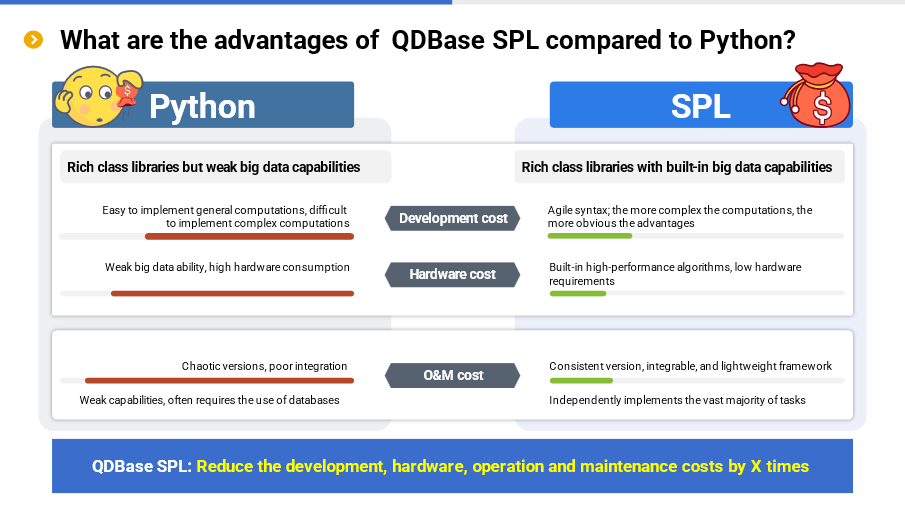

As for Python, some problems exist in processing structured data. Python (Pandas) itself provides rich computing libraries, and it is easy to code in Python for many simple calculations, making Python basically equivalent to SQL. However, for some slightly complicated calculations, it is difficult to code in Python, and the development cost is still high.

In addition, Python is weak in processing big data. Since Python does not provide a cursor type for external storage, it would be cumbersome and inefficient to process the data exceeding memory capacity, resulting in increased hardware cost.

Moreover, Python has the problem of version chaos. Incompatibility between different versions will lead to high usage and O&M costs and, Python is very poor in integration, making it difficult to be combined in applications, and hence it often needs to deploy separate service, yet this will increase O&M costs. Like Java, Python is also frequently used together with SQL in practice, and likewise, the existing SQL problem still exists while new problem arises.

The advantages of SPL in terms of development and performance have been described a lot above. In addition, SPL has many other advantages: no version issue; good integration makes it possible to integrate in applications seamlessly; fully-featured; simpler technology stack; no need to resort to other technologies; lower O&M costs.

Overall, SPL can achieve a cost reduction of several times in development, hardware, and O&M compared to SQL, Java, and Python.

Why esProc SPL works better

From the above cases, we can see that SPL has clear advantages over SQL in terms of code efficiency and computing performance. Why? Is there anything special about SPL?

However, the question should be asked the other way around, that is, why SQL does not work well?

Let’s start with an example to explain why it’s difficult to write in SQL: calculate the maximum consecutive days that a stock keeps rising.

SELECT MAX(ContinuousDays)

FROM (SELECT COUNT(*) ContinuousDays

FROM (SELECT SUM(UpDownTag) OVER ( ORDER BY TradeDate) NoRisingDays

FROM (SELECT TradeDate,

CASE WHEN Price>LAG(price) OVER ( ORDER BY TradeDate)

THEN 0 ELSE 1 END UpDownTag

FROM Stock ) )

GROUP BY NoRisingDays )

SQL adopts a four-layer nested code, which is more complicated overall. This problem was once used as a recruitment test of our company, the pass rate was less than 20%. Since this test question was too difficult, we modified it: give the statement and ask the candidate to find its objective. Unfortunately, the pass rate was still not high. What does it tell us? It tells us that once the calculation logic becomes slightly complex, SQL will become difficult in both understanding and writing!

In fact, this problem can be easily solved by following natural thinking, you can do it in a few very simple steps: i)sort the records by trading day; ii) get the intervals of continuous rise and fall; iii) count the number of days of the maximum interval of continuous rise. However, since SQL doesn’t support ordered operation sufficiently and doesn’t provide ordered grouping directly, it has to turn to a multi-layer nesting and roundabout way, resulting in a difficulty in understanding and writing SQL code. This calculation problem is not a rare problem, there are many more complex calculations in reality, and those SQL codes with over a thousand lines are exactly to solve such problems.

Now let’s take another example to explain why SQL can’t run fast: get the top 10 from 100 million rows of data.

SELECT TOP 10 * FROM Orders ORDER BY Amount DESC

As we can see that although this code is not complex, it has the keyword ORDER BY, which means doing a big sorting on all data first, and then taking the TOP 10. The big sorting is very slow, involving multiple times of swaps between memory and external storage. If this statement is executed according to its superficial meaning, the efficiency will be very low. Actually, there is a faster method without full sorting, and the only thing you need to do is to keep a set containing 10 largest numbers, and you can get the result by traversing the data only once. But SQL cannot describe such algorithm. In this case, you can only rely on the optimizer of the database. For simple calculations, most databases have the ability to optimize in practice, and won’t really do full sort, so it can be calculated quickly. But, if the calculation becomes complex, the optimization engine will get confused and has to execute a slow algorithm. For example, the following code is to get the in-group Top 10:

SELECT * FROM (

SELECT *, ROW_NUMBER() OVER (PARTITION BY Area ORDER BY Amount DESC) rn

FROM Orders )

WHERE rn<=10

There is a big difference between this SQL code and the previous one. This code needs to use the sub-query and window functions to implement the calculation in a roundabout way. For such complex calculation, the optimization engine of the database cannot optimize and has to perform sorting, and as a result, it is very slow. In an actual test, we found that calculating TopN of a grouped subset in Oracle is 21 times slower than calculating TopN of a whole set. In our opinion, when calculating in-group TopN, the performance should have only dropped a little because only one condition (grouping) is added, but the result was far from what we expected. Therefore, we think that Oracle probably did sorting when calculating in-group TopN, resulting in a sharp drop in performance (whatever, we don’t have the source code to confirm) and making the optimizer fail.

Then, how SPL solve these?

For the first example:

Stock.sort(TradeDate).group@i(Price<Price[-1]).max(~.len())

SPL provides ordered grouping. Although the implementation idea is the same as that of the previous SQL code, it is very concise to express.

For the second example:

SPL regards TopN as the aggregation operation of returning a set, avoiding full sorting; The syntax is similar in case of whole set or grouped subset, and there is no need to take the roundabout approach. In most cases, writing simple and running fast are the same thing actually. If the code is written simply, it will run fast, and conversely if the code is written too complex it will not run fast.

Let’s make another analogy, calculate 1+2+3+…+100. Ordinary people will adopt the most common method, that is, 1+2=3, 3+3=6, 6+4=10…; while Gauss found that 1+100=101, 2+99=101…,50+51=101, and that there are fifty same sums (101) in total, so he multiplied 50 by 101 and quickly got the result. I’m sure this story is not new to anyone since we read it in elementary school. We all think that Gauss is so clever that he thought of such an ingenious solution, however, it is easy for us to overlook one point: in the days of Gauss, multiplication was already available. We know that multiplication was invented later than addition, and if multiplication had not been invented in those days, he wouldn’t have found this way to solve this problem so quickly no matter how clever Gauss is. Now let’s get back to our topic, SQL is like an arithmetic system with addition only, if you want to solve the continuous adding problem, you have to add them one by one, resulting in long code and inefficient calculation. In contrast, SPL is equivalent to the invention of multiplication, it simplifies the writing and improves the performance.

The difficulties of SQL stem from relational algebra, such theoretical defects cannot be solved by engineering methods; SPL is based on a completely different theoretical system: “discrete dataset model”, and provides more abundant data types and basic operations, and hence it has more powerful expression ability.

Does it mean that only the programmers as clever as Gauss can use SPL?

Not really, SPL is prepared for ordinary programmers, and in most cases, they can write correct code just by following natural thinking. On the contrary, if SQL is used, they often have to implement in a roundabout way when the calculation becomes slightly complex, and cannot figure it out if he is not an experienced programmer. In this sense, SPL is simpler than SQL. However, if you want to master SPL, you do need to learn more. Don’t worry, for SPL-related knowledge, you have already learned some of them (such as algorithms and data structures you learned in college), as for the rest you don’t know, clever programmers have already summarized the knowledge points (not many), and all you need to do is just to learn the summarized points. Once you master these points, you will be able to handle complex problems with ease.

In practice, there are many scenarios that SQL can’t handle. Let’s give a few examples:

In the funnel analysis for the user behavior transformation of e-commerce sector, it needs to calculate the user churn rate after each event (like page browsing, searching, adding to cart, placing an order and paying). The analysis is effective only when these events are completed within a specified time window and occur in a specified order. Describing such complex order-related calculations in SQL is cumbersome, and even if the code is written, it is not efficient, not to mention optimize.

For the above-mentioned complex multi-step batch job case on big data, some complex procedures need to be done with the help of cursors, however, cursor reading is slow and cannot calculate in parallel, wasting computing resources. During the multi-step procedural calculation, it needs to repeatedly buffer the intermediate results, resulting in a very low efficiency and a failure to complete the batch job within the specified time window.

In multi-index calculation on big data, it needs to perform the calculation of hundreds of indexes at one time and use the detailed data many times, during which association is also involved, SQL needs to traverse data repeatedly; it involves mixed calculation of large table association, conditional filtering, grouping and aggregation, and deduplication, as well as real-time calculation with high concurrency. All these calculations are hard to implement in SQL.

Due to the limitation of space, we only present the SQL code for e-commerce funnel calculation below to feel its complexity.

with e1 as (

select uid,1 as step1,min(etime) as t1

from event

where etime>= to_date('2021–01–10') and etime<to_date('2021–01–25')

and eventtype='eventtype1' and …

group by 1),

e2 as (

select uid,1 as step2,min(e1.t1) as t1,min(e2.etime) as t2

from event as e2

inner join e1 on e2.uid = e1.uid

where e2.etime>= to_date('2021–01–10') and e2.etime<to_date('2021–01–25')

and e2.etime > t1 and e2.etime < t1 + 7

and eventtype='eventtype2' and …

group by 1),

e3 as (

select uid,1 as step3,min(e2.t1) as t1,min(e3.etime) as t3

from event as e3

inner join e2 on e3.uid = e2.uid

where e3.etime>= to_date('2021–01–10') and e3.etime<to_date('2021–01–25')

and e3.etime > t2 and e3.etime < t1 + 7

and eventtype='eventtype3' and …

group by 1)

select

sum(step1) as step1,

sum(step2) as step2,

sum(step3) as step3

from

e1

left join e2 on e1.uid = e2.uid

left join e3 on e2.uid = e3.uid

This is a three-step funnel calculation. SQL lacks order-related calculations and is not completely set-oriented. It needs to detour into multiple subqueries and repeatedly JOIN. Therefore, it is difficult to write and understand, low in performance, and harder to optimize. Only a three-step funnel is presented here, and more subqueries need to be added in case of more steps, and hence the difficulty is evident.

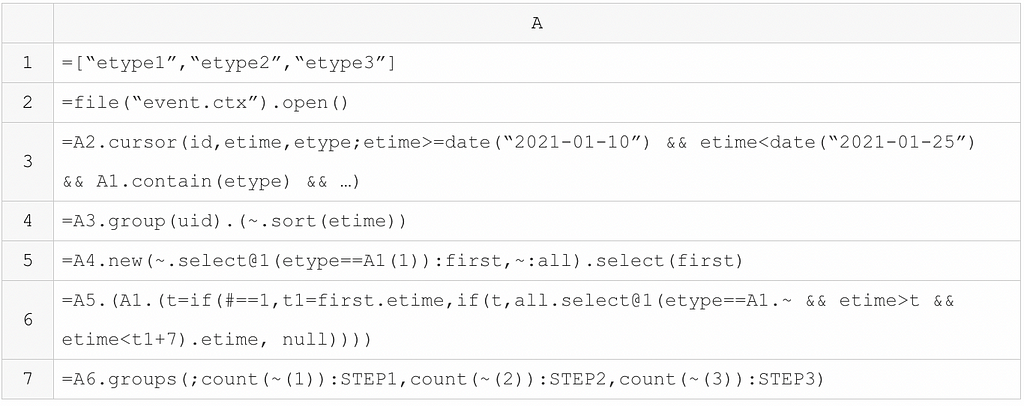

In contrast, SPL code is much simpler:

SPL provides order-related calculations and is more thoroughly set-oriented. Code is written directly according to natural thinking, which is simple and efficient. In addition, this code can handle funnels with any number of steps, the only thing we need to do is to modify the parameter.

This is a simplified real case (the original SQL code has almost 200 lines). The user did not get result after 3 minutes running on Snowflake’s Medium server (equivalent to 4*8=32 cores), while the user run the SPL code on a 12-core, 1.7G low-end server and got the result in less than 20 seconds.

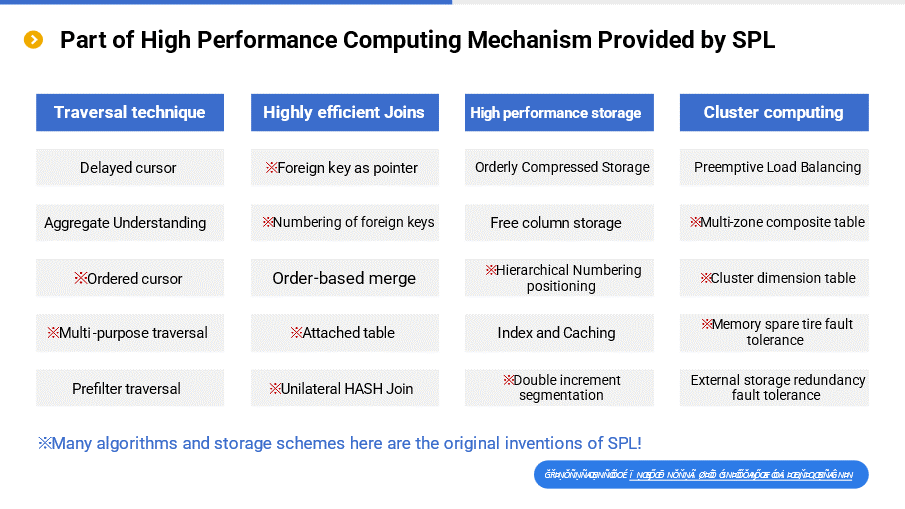

As we mentioned above, SPL is equivalent to the invention of multiplication based on the addition, in fact, SPL invents many “multiplications”. Here below are part of SPL’s high-performance algorithms, among which, many algorithms are the original inventions of SPL.

For example, the multipurpose traversal algorithm can achieve the effect of multiple operations during one traversal; foreign key as pointer can map the foreign key field as the address of the record to which the foreign key points, making it more efficient when using this record again; double increment segmentation can adapt to the rapidly expanding data scale, making it very efficient to store and access the data.

Then, why doesn’t Java work well either?

As mentioned earlier, because Java is too native and lacks necessary data types and computing libraries, any computing task has to be done from scratch, which is very cumbersome. For example, it requires more than ten lines of code to implement a grouping and aggregating task in Java. Although Java 8’s Stream simplifies this type of operation to some extent, it is still very difficult to implement for slightly more complex calculations (there is a big gap even compared to SQL).

It is more difficult to code in Java for calculations with high performance requirements, such as the TopN operation that does not utilize big sorting algorithm, more efficient HASH join algorithm, and ordered merging algorithm. These algorithms themselves are difficult to implement, and Java lacks computing libraries, and hence many application programmers do not know how to solve them and often have to resort to relatively simple but slow algorithms. As a result, the computing speed is even slower than that of SQL, let alone solve these problems.

The performance of processing big data is largely related to data IO. If the IO cost is too high, it doesn’t work no matter how fast the operation speed is. Efficient IO often relies on specially optimized storage scheme. Unfortunately, however, Java lacks a widely used efficient storage scheme, and instead it uses text file or database to store data in general. The performance of database interface is very poor. Although text file is a little bit better, it will consume too much time in parsing the data type, resulting in lower performance.

If Java’s shortcomings in practice are considered, such as difficult to hot swap and tight coupling, Java falls short compared to SQL, let alone surpass SQL to solve the previous problems.

Why does Python still fail to work well?

By analyzing Java, we basically know that many of Python’ shortcomings are similar. For example, it is difficult to implement relatively complex calculations, such as adjacent reference, ordered grouping, positioning calculation, non-equivalence grouping.

For big data computing without corresponding external storage computing mechanism, it will be very difficult to implement in Python. Moreover, Python does not support true parallel processing. The parallel processing of Python itself is fake, which is actually the serial processing for CPU, or even slower than serial processing, and thus it is difficult to leverage the advantages of modern multi-core CPU. There is a Global Interpreter Lock in the CPython interpreter (the mainstream interpreter of Python). This lock needs to be got ahead of executing Python code, which means that even if multiple threads of CPU work in the same time period, it is only possible to execute the code with one thread and multiple threads can only execute the code in an alternately way. Yet, since multiple-thread execution involves complex transactions such as context switching and lock mechanism processing, the performance is not improved but decreased.

Due to the inability of Python to make use of simple multi-thread parallel processing mechanism in one process, many programmers have to adopt the complicated multi-process parallel processing method. The cost and management of process itself are much more complex, and the effect of parallel processing cannot be comparable to that of multiple threads. In addition, the inter-process communication is also very complex, programmers have to give up direct communication sometimes, and use file system to transfer the aggregation result instead, which leads to a significant performance decrease.

Like Java, Python does not provide efficient storage scheme for high-performance computing, so it has to resort to open-format files or databases, resulting in low performance. In many cases, Python is used together with SQL, but it cannot eliminate the problems of SQL. If Python’s problems such as version and integration are considered, we can conclude that Python does not work indeed.

Technical characteristics

We explained the reason why it is simple to code and fast to run in esProc SPL above, that is, the low code and high performance of SPL.

Now let’s take a closer look at the technical characteristics of esProc SPL.

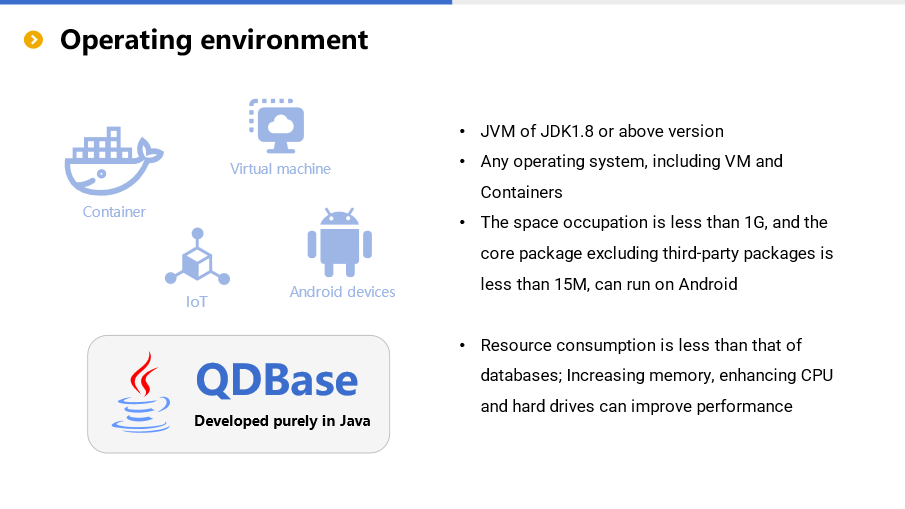

Currently, esProc is a software developed purely in Java, and can run on any operating system under JVM environment of JDK1.8 or higher version, including common VMs and Containers.

After normal installation, esProc takes up less than 1G of space, most of which is occupied by the referenced third-party external data source driver packages. The core package of esProc is less than 15M, making it possible to run even on Android.

Except for JVM, esProc has no other hard requirements on operating environment. The requirements for the capacities of hard disk and memory are related to the computing task and could vary greatly from different tasks. When performing the same commuting task, esProc usually requires less hardware resources than traditional databases (especially for distributed database). Increasing memory’s capacity, choosing a CPU with a higher frequency and number of cores, and utilizing an SSD usually have a positive impact on improving computing performance.

On the leftmost of the framework are the business database and traditional data warehouse (if any). In fact, there may be various types of data sources, and these data can be converted to esProc’s high-performance file storage through “data solidification”, making it possible to achieve higher performance.

Of course, data converting is not a must. For some scenarios that require higher data real-timeness, esProc can read the data and calculate in real time through the interfaces (like JDBC) that data source provides. However, due to the inability of esProc to intervene in the performance of data interface, the computing performance may be different for different data sources, and poor performance may also occur. If users have requirements on both real-timeness and computing performance, then the method of solidifying the cold data and reading the hot data in real-time can be adopted. To be specific, converting the unchanged historical cold data to esProc’s high-performance storage, and reading the hot data of data source and, processing all the data with the help of the mixed computing ability of esProc can meet both requirements at the same time.

The high-performance file storage of esProc will be introduced in detail below.

In the middle part of the framework is esProc Server, which is responsible for actual data processing. esProc Server allows us to deploy multiple nodes in a distributed manner for cluster computing, and supports load balancing and fault tolerance mechanisms. The cluster size of esProc is relatively small (no more than 32 nodes), and more resources are used for computing (rather than management and scheduling). In many cases that have been implemented, esProc can handle the cluster computing scenarios of traditional technologies (MPP/HADOOP) through a single node, and the performance is higher, so there is no need to worry about the problem of insufficient computing ability.

esProc’s SPL script will be distributed on each cluster node, and the script can be developed and debugged remotely on esProc’s IDE, and the data will not be saved or downloaded to local node during debugging, and hence it is more secure.

esProc encapsulates standard interfaces like JDBC/RESTful for application to call. For Java applications, esProc can be directly integrated, and used as in-application computing engine. For non-Java applications, esProc can be called through other interfaces.

From the perspective of the entire framework, in addition to providing simple and efficient data processing ability, esProc is more flexible in use, as it can be used independently or integratedly. Moreover, relatively lightweight computing way makes esProc light to use, not as heavy as traditional distributed technology.

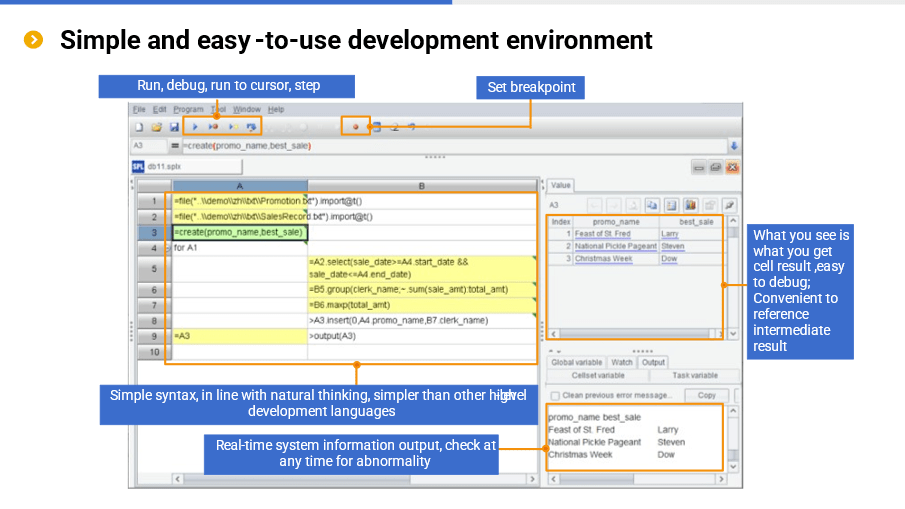

In terms of development and debugging, esProc SPL provides simple and easy-to-use development environment.

In IDE, we can code step by step, and view the running result of each step in real time in the result panel on the right, and there are various editing and debugging functions such as debug, step in and set breakpoint. Easy-to-use editing and debugging functions are also indispensable for low code, which are very different from SQL (and stored procedures), and can significantly reduce development cost. With these features, esProc SPL is also frequently used for desktop analysis and is very convenient.

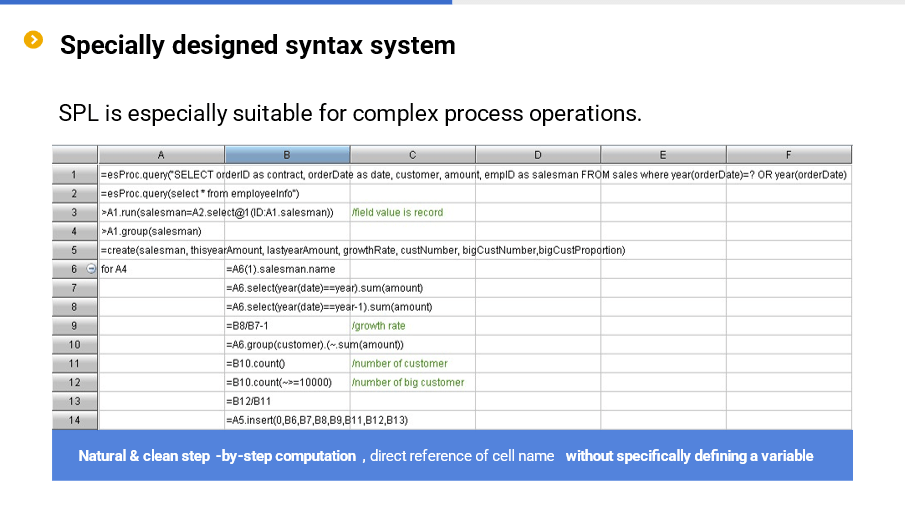

SPL is a specially designed syntax system, and naturally supports stepwise calculation, and is especially suitable for complex process operations. In addition, SPL boasts more complete programming abilities than SQL such as loops, branches, procedures and subprograms. In each step of operation, SPL can reference the result of the previous step with the cell name without defining a variable (of course, variable is also supported).

Moreover, SPL provides a very rich structured data computing library, enabling it to process the string, date and time, and perform mathematical calculation, and read/write the files and databases, and support JSON/XML multi-layer data, and do the grouping / loop / sorting / filtering / associating / set /ordered calculations. The loop function provided especially for sequence (table sequence) can greatly simplify the set operation. SPL also provides the cursor for big data calculation, the channel for reducing the repeated traversal of hard disk, and the parallel computing and distributed computing mechanisms. Furthermore, SPL provides the modeling and prediction functions for AI, as well as external library functions for dozens of data sources such as MongoDB, Elasticsearch, HBase, HDFS and Influxdb.

At present, SPL provides more than 400 functions, and each function contains several options. It is equivalent to thousands of library functions. These functions, together with perfect programming language functions like procedure, loop and branch allow SPL to perform a full range of data processing tasks, which is a typical manifestation of its versatility characteristic.

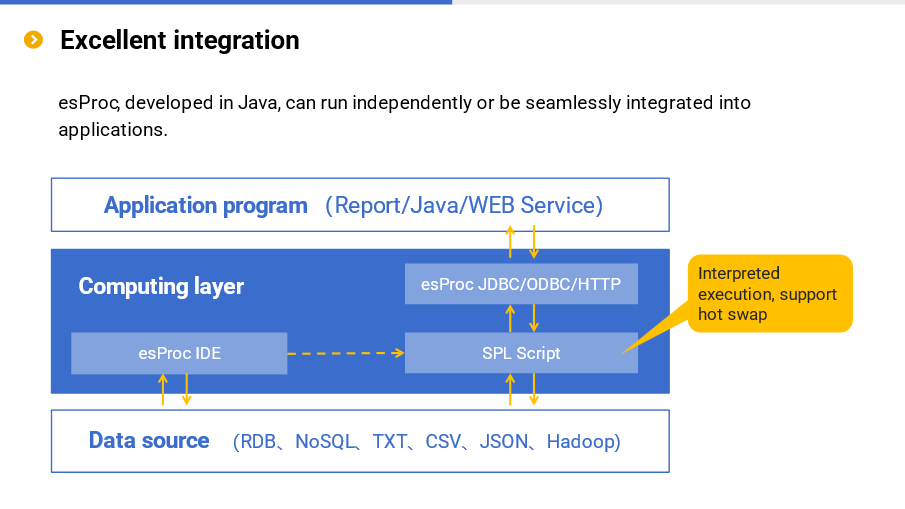

esProc SPL also boasts a very high integration. Developed in Java, SPL can run independently or be seamlessly integrated into applications to serve as an in-application computing engine, and can play an important role in scenarios such as micro-service, edge computing, and report data preparation.

Excellent integration reflects its lightweight characteristic. esProc SPL does not always need an independent server to work (very different from the database), and instead, only embedding the jars can provide powerful computing power for the application. Moreover, the jars are only tens of MBs in size, very small and lightweight, and can be used anytime and anywhere, even can be run on an Android phone.

esProc SPL supports dozens of data sources and boasts the mixed computing ability. Multiple data sources can be calculated directly without loading data into the database. In addition to better data real-time, it can also fully retain respective advantage of diverse data sources. For example, RDB is strong in computing ability but low in IO efficiency, you can make RDB do part of calculations first and then let SPL do the rest calculations; MongoDB is naturally suitable for storing dynamic multi-layer data, you can let SPL calculate multi-layer data directly; File system is not only more efficient in reading and writing, but more flexible in use, SPL can calculate directly based on file, and give full play to the effectiveness of parallel computing.

esProc SPL’s support for multiple data sources reflects its versatility once again. Moreover, since esProc SPL has no metadata, multiple data sources can be accessed directly, and mixed calculation can be performed, and hence esProc SPL is lighter, this also once again reflects its lightweight characteristic.

Currently, esProc SPL can process the following data types:

- Structured text: txt/csv

- Common text, string analysis

- Data in Excel files

- Multilayer structured text: json, xml

- Structured data: relational database

- Multilayer structured data: bson

- KV type data: NoSQL

In particular, esProc provides powerful support for multilayer structured data such as json and xml, far exceeding traditional databases. Therefore, esProc can work well with json-like data sources like mongodb and kafka, and can also easily exchange data with HTTP/Restful and microservice and provide computing service.

In addition, esProc can easily calculate the data in Excel files. However, esProc is not good at handling Excel’s format, nor is it good at processing data such as images, audios and videos.

Furthermore, esProc SPL provides its own efficient data file storage. The private data format not only provides higher performance, but allows us to store data by business category in file system tree directory.

Currently, SPL provides two file storage formats: bin file and composite table.

The bin file is a basic binary data format, which adopts the compression technology (less space occupation, faster reading), stores the data types (faster reading since there is no need to parse data type), and supports the double increment segmentation mechanism that can append the data, making it very easy to implement parallel computing through segmentation strategy, thereby improving the computing performance.

The composite table provides a more complex storage structure. Specifically, it supports mixed row-wise and columnar storage; ordered storage improves compression rate and positioning performance; it supports more efficient intelligent index; it supports the integration of primary table and sub-table to effectively reduce storage and association; it supports the double increment segmentation mechanism, making it easier to implement parallel computing to improve the computing performance.

Since the file-based storage doesn’t require database, and there is no metadata, it makes the use of SPL more flexible, more efficient, more lightweight and cheaper, and better suited to the needs of big data era.

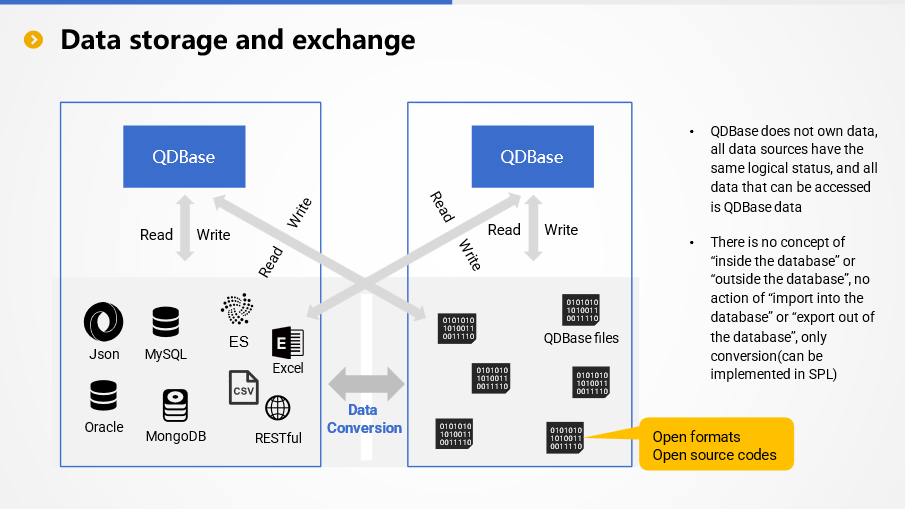

esProc does not have the concept of “warehouse” of traditional data warehouses, nor does it have the concept of metadata and, it does not provide unified management for the data of a certain theme. For esProc, there is no concept of “inside the database” or “outside the database”, and there is no action of “importing into database” or “exporting out of database”.

Any accessible data source can be regarded as the data of esProc and can be calculated directly. Importing into database is not required before calculation, and exporting out of database is also not required deliberately after calculation, as the result can be written to target data source through its interface.

esProc encapsulates access interfaces for common data sources, and various data sources have basically the same logical status. The only difference is that different data sources have different access interfaces, and different interfaces have different performance. Since these interfaces are provided by data source vendors, esProc cannot intervene in their functionality and performance.

esProc designs special-format files (bin file and composite table) to store data in order to obtain more functionalities and better performance. These files are stored in the file system, and esProc does not own these data files technically. esProc has made the file formats public (access code is open source), and any application that can access these data files can read and write them according to a publicly available specification (or based on open-source code). Of course, it is more convenient to read and write directly in SPL.

In this sense, there is no action of “importing into database” or “exporting out of database” when exchanging data between esProc and external data source. However, there may be the action of data conversion, that is, converting external data to esProc format files to obtain more functionalities and better performance, or converting esProc format files to external data for other applications to use. All these conversion actions can be done using SPL.

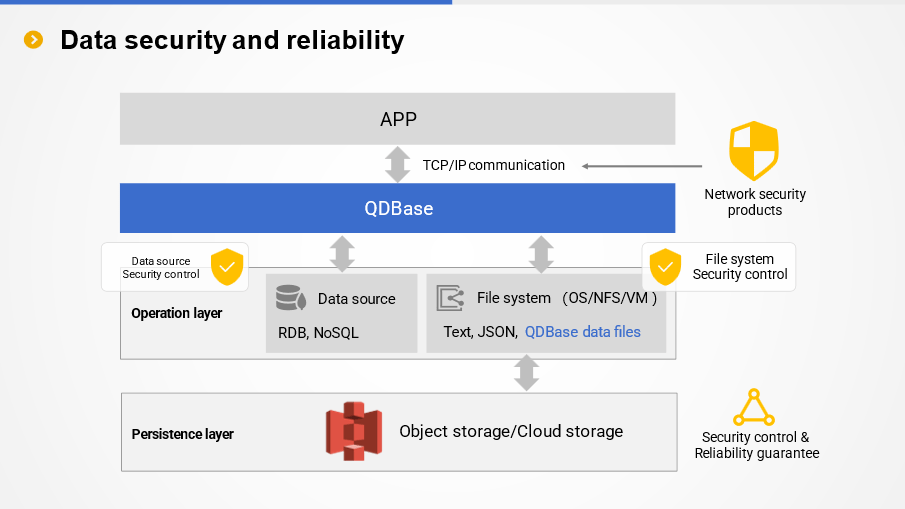

esProc does not manage data in principle, nor is it responsible for data security. To some extent, it can be said that esProc does not have and does not need a security mechanism.

The security of persistent data is the responsibility of the data source itself in principle. For data files in esProc format, many file systems or VMs provide complete security mechanism (access control, encryption, etc.), which can be utilized directly. The cloud edition of esProc also supports retrieving data from object storage services such as S3 before computing, and can also utilize their security mechanisms.

The embedded esProc is in the same process with the main Java application, and only provides computing service for main application. Since esProc doesn’t provide external service interface, there are no security and permission issues. esProc of the independent service process uses standard TCP/IP and HTTP to communicate, and can be monitored and managed by professional network security products, and the specific security measures will be the responsibility of these products.

esProc specializes in computation and is not responsible for the reliability of persistent storage. There are professional technologies and products in this regard as well. esProc tries to follow standard specifications so that it can work with these technologies and products. For example, data can be persisted to highly reliable object storage, and for this reason, esProc provides corresponding interface, allowing us to access these data sources to perform calculation.

esProc is a professional computing technology and does not offer professional security capabilities. The philosophy of esProc is to work with other professional security technologies.

We’ve presented some technical characteristics of esProc SPL above. Now let’s see some esProc SPL solutions applied in more scenarios.

FAQ

Is esProc based on open source or database technology?

We’ve analyzed the shortcomings of existing technologies (mainly SQL) in detail above, which are mainly caused by the theory system behind them. If these theories are still followed, it’s impossible to eliminate such shortcomings fundamentally. For this reason, we invent a brand-new computing model — discrete dataset, and develop esProc SPL based on the model. Since everything is new, and there is no relevant theories and engineering products in the industry to reference, we have to develop from scratch, and any part from the model to code is originally created.

Where can esProc be deployed?

Since esProc is developed completely in Java, it can be deployed in any environment equipped with JVM, including but not limited to VM, cloud server and container. In practice, esProc can be either used independently or integrated into applications. When it is used independently, we need to run a separate esProc server, and can build a distributed cluster; when it is integrated into an application, it is embedded in the form of jars, and regarded as a part of the application (computing engine).

How does application invoke esProc?

esProc provides a standard JDBC driver, and thus it can be seamlessly integrated in a Java application directly. For a non-Java application like .net/Python, we can invoke it via ODBC/HTTP/RESTful interface.

Can esProc be integrated with other frameworks?

esProc can run as an independent service process like a traditional database. In addition, esProc provides standard JDBC driver and HTTP service for application to call, and hence SPL script can be executed in Java applications by sending SPL statements through JDBC. Calling the script code of esProc is equivalent to calling the stored procedure in relational database. For non-Java applications, we can access esProc-provided computing service through HTTP/Restful mechanism.

For applications developed in Java, esProc can be fully embedded, that is, all computing functions are encapsulated in the JDBC driver, and run in the same process as the main application without relying on external independent service process.

Because esProc is software developed purely in Java, it can be completely and seamlessly embedded in various Java frameworks and application servers, such as Spring, Tomcat, and can be scheduled, operated and maintained by these frameworks. For these frameworks, esProc has the same logical status as that of Java applications written by users.

It should be noted that for computing type frameworks like Spark, while esProc can be integrated seamlessly, it does not make practical sense. esProc requires converting data to SPL-specific data objects before calculating, which is not only time consuming, but makes the data objects in original computing framework meaningless, resulting in a failure to combine the advantages of the two types of data objects. The key point of these computing frameworks is their data objects (such as Spark’s RDD). If such data objects can no longer be used, the computing frameworks themselves will be meaningless. Since the computing ability of esProc far exceeds that of common computing frameworks, there is no need to use these frameworks anymore.

In particular, for stream computing frameworks (such as Flink), esProc cannot play a role even if it can be integrated. esProc has independently served stream computing scenarios for many times, and does not need the support of stream computing frameworks at all. For the same amount of computation, esProc typically consumes resources one order of magnitude lower than these stream computing frameworks, and has richer functionality.

Can esProc run based on the existing database?

Yes, of course! esProc supports dozens of data sources, including database, text, excel, json/xml, webservice, etc. Moreover, esProc can perform association and mixing operations between database and other data sources (such as text).

However, for data-intensive tasks (most tasks related to big data belong to such task), reading the data from database will consume a lot of time due to poor I/O performance of database. Even if the calculation time of esProc is very short, the overall time is still very long, resulting in a failure to meet performance requirements. Therefore, for scenarios that require high performance, you need to move a large amount of cold data from database to esProc’s high performance file, only in this way can an optimal performance be obtained and, the remaining small amount of hot data can still be stored in the database, and real-time full data query can be easily implemented by means of the multi-source mixed computing ability of esProc.

Where does esProc store data?

esProc stores data as files, supports open text format, and also provides a high-performance private file format. Since files are more open and flexible, it is easier to design high-performance storage schema based on files, and improve computing performance through parallel computing. esProc supports the file system on any OS, including local file system and NFS. Therefore, esProc has a natural ability to implement separation between storage and computation, unlike database that is difficult to separate storage and calculation as it needs to bypasses the file system and operate directly hard disk.

How to ensure the high availability of esProc?

esProc supports distributed computing, allowing multiple nodes to work together. In practice, however, distributed computing is rarely used, as esProc can handle most tasks and meet the expected response speed through a single node, except for high-concurrency scenarios.

The upcoming cloud edition of esProc (supporting private deployment) will support automatic elastic computing. When the data request volume increases, new VM will be automatically enabled to compute, and when the data request volume decreases, the idle VM will be automatically shut down.

Embedded esProc only provides computing service for main application, and cannot provide external service, nor is it responsible for the reliability of external service. The reliability of external service is the responsibility of the main application and the framework.

The esProc of the independent process supports the hot standby mechanism, and JDBC will choose one process with lighter workload among the currently working service processes to perform calculation. esProc’s distributed computing also provides fault tolerance. However, since the design goal of esProc is not a large-scale cluster, once a node failure is found during the calculation, the task will be declared failed. esProc’s fault tolerance is only limited to allowing the cluster to accept new tasks when a node failure is detected, which is only suitable for small-scale clusters.

The service process of esProc currently does not provide automatic recovery function after failure, and the failure needs to be handled by administrators. However, it is not difficult to design a monitoring process to implement the automatic function.

The elastic computing mechanism of esProc (cloud edition) will avoid the currently failed nodes when allocating VMs, hereby achieving high availability to a certain extent.

How to extend the functionalities of esProc?

esProc is a software written in Java, and offers an interface to call the static functions written in Java, thus extending the functionality of esProc. esProc also opens up the interface of custom functions, allowing application programmers to write new functions in Java and move them in esProc for use in SPL.

What are the weaknesses of esProc?

Comparing with RDB:

The metadata capability of esProc is relatively immature.

esProc is not the DBMS, and does not have the concept of metadata in the traditional sense, and data is mostly stored, managed and used as the form of files. Most operations start by accessing data sources (files), which is a bit more troublesome than the database for simple calculations, but more advantageous for complex calculations.

Comparing with Hadoop/MPP:

The scale of Hadoop/MPP database cluster is relatively large (although limitations are usually laid on the node number of MPP cluster), and mature experiences on the utilization, O&M management of such cluster are already available. In contrast, esProc cluster targets to small- to medium-scale, usually a few to a few dozen nodes, and even so, there is still a lack of practical experience (relative to Hadoop/MPP). In practice, esProc cluster is rarely employed because customer can often achieve or even surpass the effect of their original cluster on only one esProc node, which further leads to the relative lack of experience in utilizing esProc cluster.

Comparing with Python:

We are currently developing the AI functions of esProc SPL, and improving its AI modeling and prediction functions gradually, but these functions are still far from Python’s rich AI algorithm libraries.

How about the compatibility between SPL and SQL?

esProc is not the computing engine of SQL system, and currently only supports simple SQL that does not involve a large amount of data, and cannot ensure the performance. In the big data computing scenarios, it can be considered that esProc does not support SQL, and of course it will not be compatible with any SQL stored procedure.

esProc will develop the dual-engine supporting SQL in the future, but it is still difficult to ensure high performance and meet the computing requirement of big data, it only makes it easier to migrate existing SQL code to esProc.

Is there a tool to automatically convert SQL to SPL?

Given the widespread use of SQL, especially when remoulding (optimizing) existing system, it is natural to wonder whether SQL can be automatically converted to SPL to reduce migration cost.

Unfortunately, it can’t.

Although database can be used as the data source of SPL, high performance cannot be implemented based on database (mainly due to the storage), so automatic conversion of SQL to SPL is impossible based on database. More importantly, due to the lack of sufficient description ability, SQL cannot implement many high-performance algorithms. In this case, forcibly converting SQL to SPL can only ensure the function, and the performance is usually much worse.

It is worth mentioning that SPL does not provide such a strong automatic optimization mechanism as SQL. After decades of development, many databases have strong optimization engines. When facing relatively simple slow SQL statements, the engines can “guess” their true intentions, and optimize them automatically so as to execute in a high-performance manner (such as TopN mentioned earlier); In contrast, the automatic optimization function of SPL is insufficient, and we are far from being as rich in optimization experience as database vendors, so we don’t have the ability to “guess” the intention of the statement, and have to execute the code directly. In this case, we can only depend on our programmers to write low-complexity code to achieve high performance.

How difficult is it to learn SPL

SPL is dedicated to low code and high performance. SPL syntax is easy and much simpler than Java, and you can master it in hours and be skilled in weeks. What is difficult is to design optimization algorithms. Fortunately, it is not difficult to learn, and we have summarized the knowledge points as fixed routines. As long as you follow these routines, you can master them and become a master.

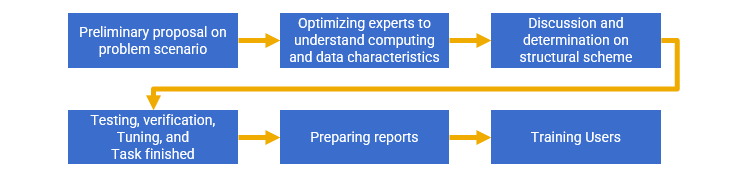

How to launch a performance optimization project

Most programmers are used to the way of thinking in SQL and are not familiar with high performance algorithms. They need to be trained to understand through one or two scenarios. There are not many performance optimization routines (dozens), and less than 10 routines are commonly used. Once you experience such routines, you will learn, and find that algorithm design and implementation are not so difficult. The first 2–3 scenarios will be implemented by users with the help of our engineer. In this process, we will teach users how to solve a problem instead of giving them a solution directly.

Summary

Finally, let’s summarize the advantages of esProc SPL as a data analysis engine.

High performance

In practice, esProc SPL’s performance to process big data is 1–2 orders of magnitude higher than that of traditional solutions on average, and thus the performance advantage is very obvious.

Efficient development

With SPL’s agile syntax and rich computing libraries, as well as procedural characteristics, complex algorithms can be implemented according to natural thinking, thereby making development efficiency higher.

Flexible and open

esProc SPL supports mixed computation of multiple data sources, and can implement HTAP at a minimum cost while effectively utilizing the advantages of multiple data sources. Moreover, esProc SPL can be integrated in applications, making it truly open and flexible.

Save resources

With the support of high computing ability, esProc SPL can achieve business goal with less hardware (single machine can rival a cluster), so it is a more environmentally-friendly technology.

Sharp cost reduction

When these advantages are reflected in the cost, the development, hardware, O&M costs can be reduced by X times.